
How can we secure AI systems with gamification?

Introducing Elevation of MLsec, a threat-modeling card game for securing Machine Learning systems.

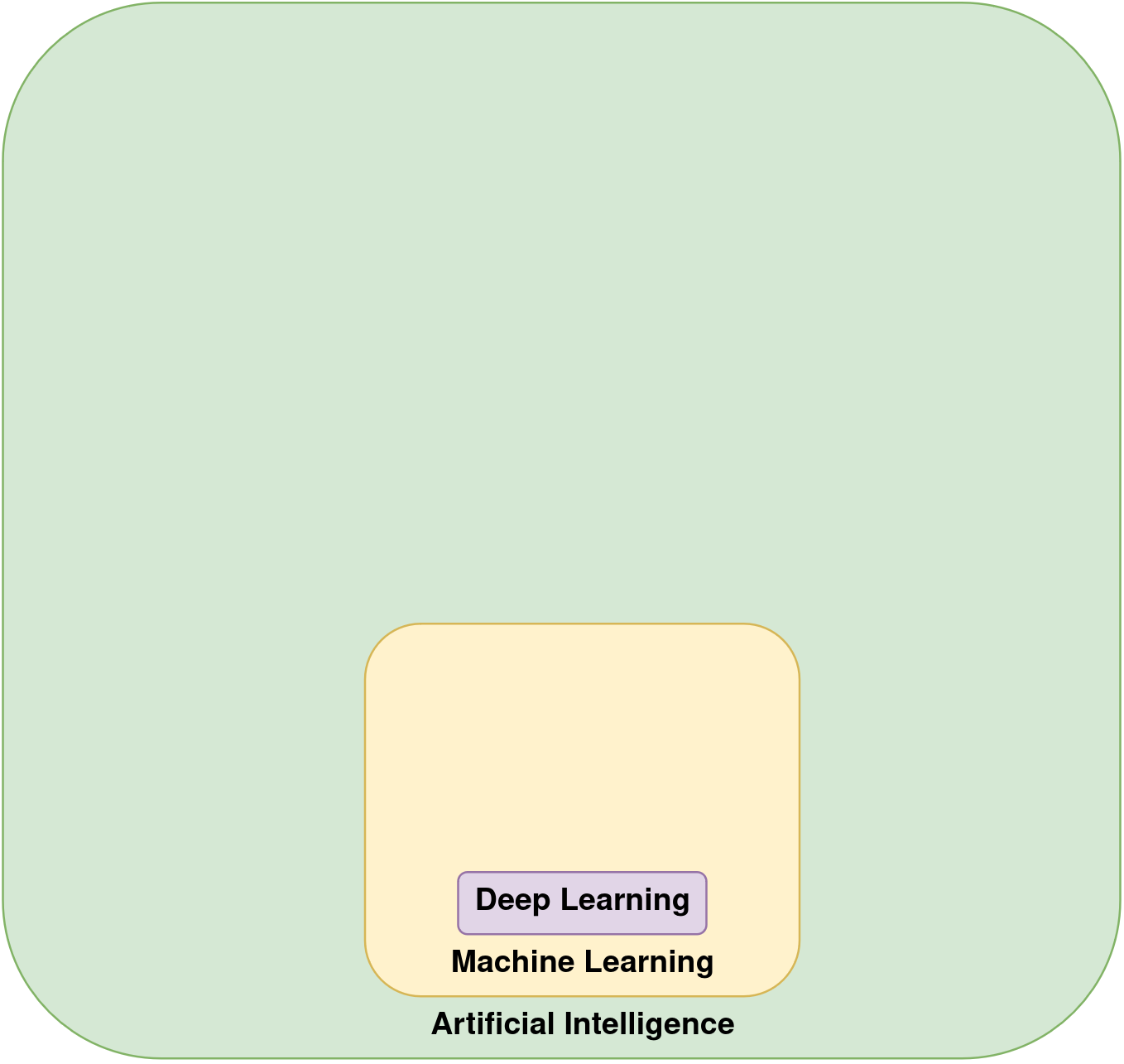

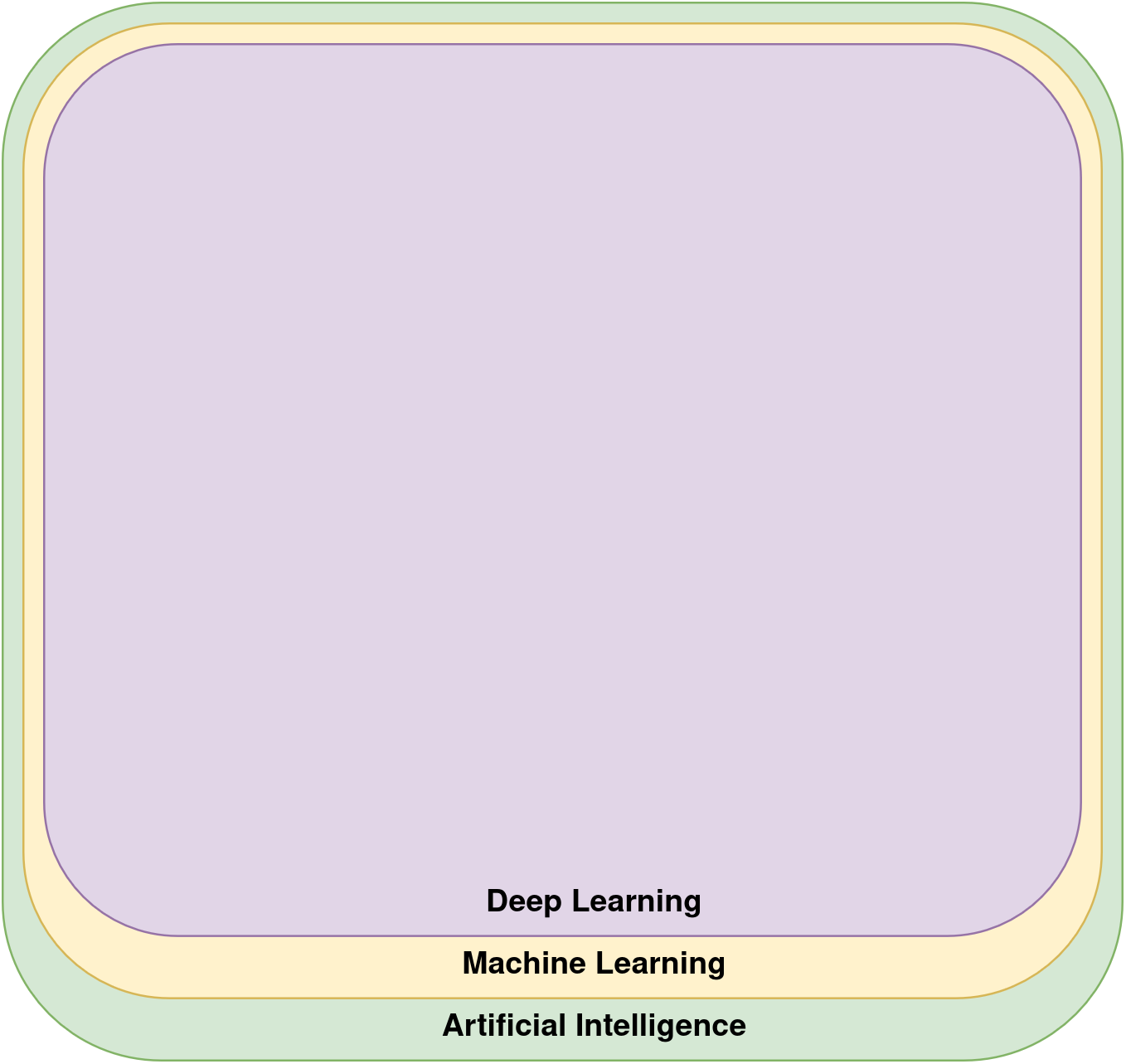

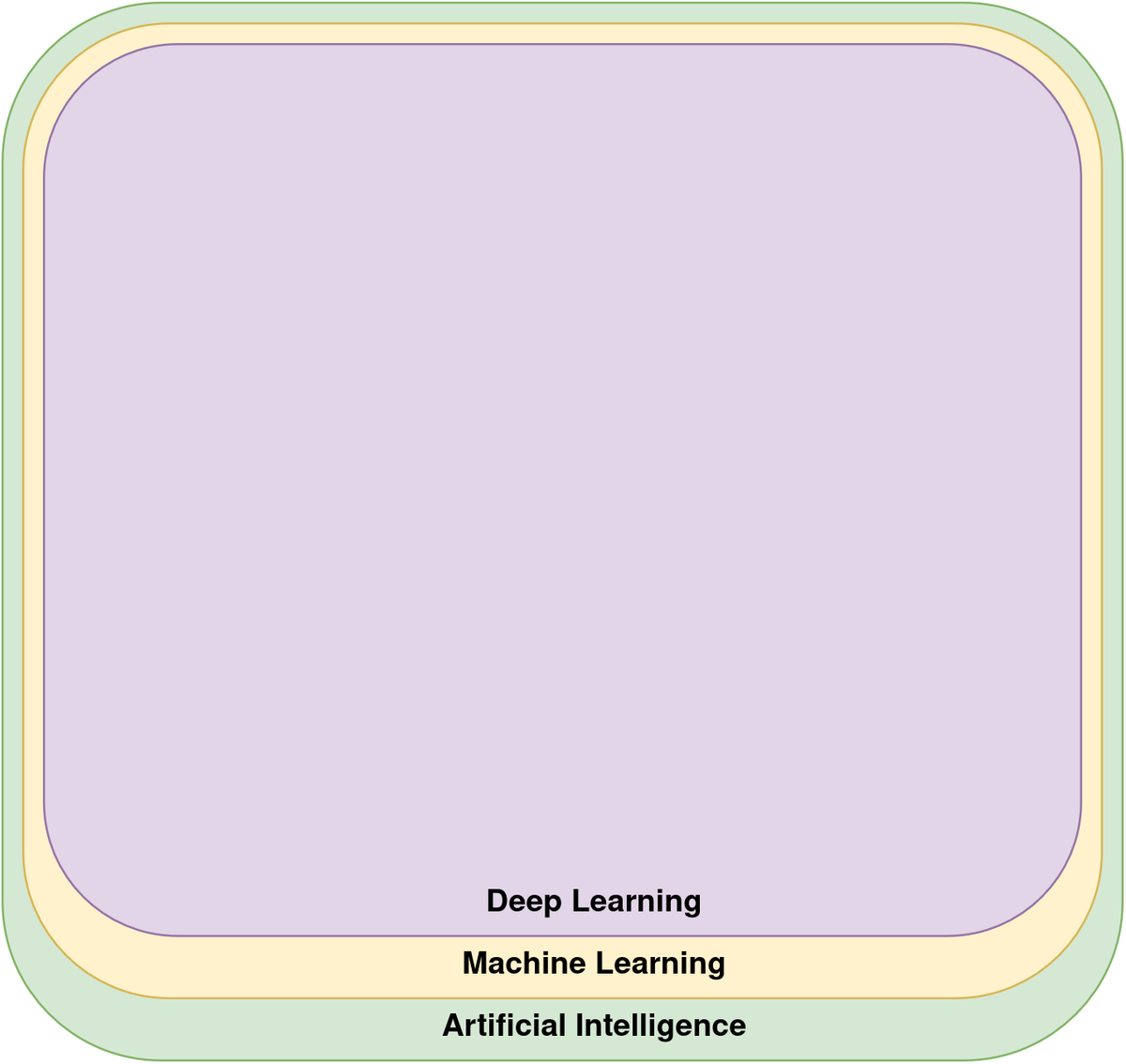

Artificial intelligence (AI) has started to change our lives, with deep learning as the main machine learning technique behind the breakthroughs of the last two decades. The terminology around this topic is often confusing. AI is an old and diverse discipline with many techniques, and the same is true of its sub-discipline, machine learning (ML). Traditionally, machine learning has been a small part of the field of AI, and deep learning a small part of machine learning, as illustrated in the following figure.

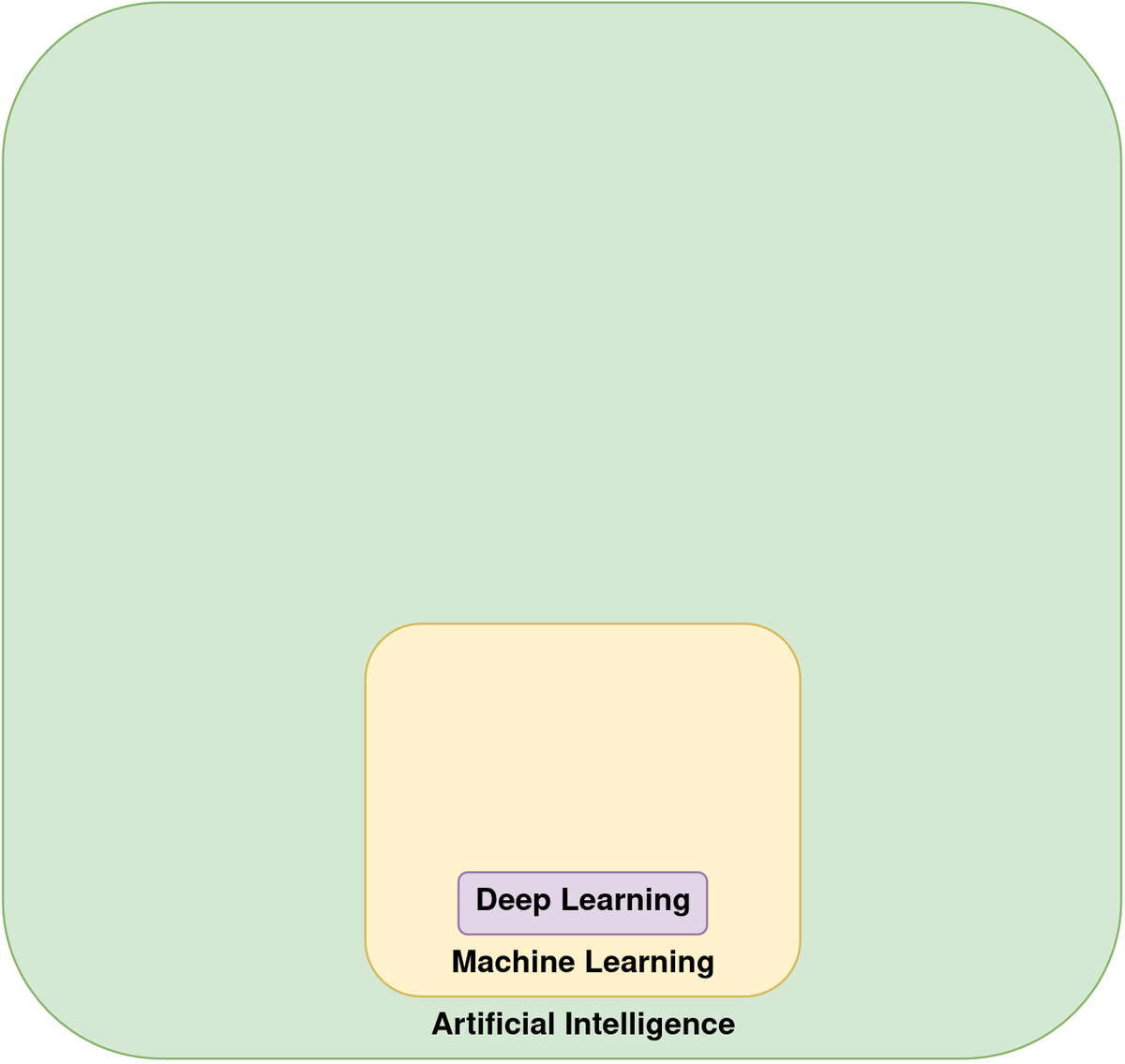

When people talk about AI or ML today, however, they most likely mean deep neural networks. As illustrated in the figure below, deep learning has taken over to the point of becoming almost synonymous with AI. We will do the same here: for the rest of this article, ML refers primarily to neural networks, and we conveniently ignore the other sub-branches.

As machine learning (ML) systems such as ChatGPT and Copilot find their way into our everyday lives, we need to stop and think about the consequences of this transformation. While ML systems offer amazing opportunities, they also bring new kinds of risks that the current software security industry isn’t yet equipped to handle.

The Berryville Institute of Machine Learning (BIML) published an architectural risk analysis covering a generic model of machine learning systems in 2020, and a follow-up on Large Language Models (LLMs) in 2024. Both raise questions and concerns about fundamental security issues in how ML systems are built. Over the last decade, machine learning has been transformed from a mostly research discipline into a globally commercialized and available industry. The ML industry is still immature in several respects, and lacks well-established software engineering standards and best practices for threat modeling and security.

Machine learning security (MLsec) is an even younger discipline that needs more research and industry adoption. We need to focus more on security engineering, as opposed to only performing red teaming and testing. Given the growth of ML, the OWASP Foundation also recently (in 2023) published top 10 lists for ML and LLM systems that highlight some of the same issues BIML raised in 2020.

Many of the security issues with ML systems stem from inherent flaws or weaknesses introduced in the design phase, from the stochastic nature of the systems, or from the role that data plays in the system’s performance. Large, neural-network-based models rely on massive amounts of data. In essence, a neural network becomes the data that it’s trained on, building an internal representation in its vast system of layers and parameters. It follows that garbage in becomes garbage out.

Bad, biased and poisoned data are a huge issue because they lead to a biased and wrecked model. Due to the stochastic nature of ML models and the huge piles of data involved, it can be really difficult to discover when something is off with an ML model. On top of this, there are several innovative ways attackers can trick ML systems into misbehaving. In total, we are looking at a number of risks and potential pitfalls across the entire training lifecycle of an ML system. Let’s have a look at how that lifecycle works.

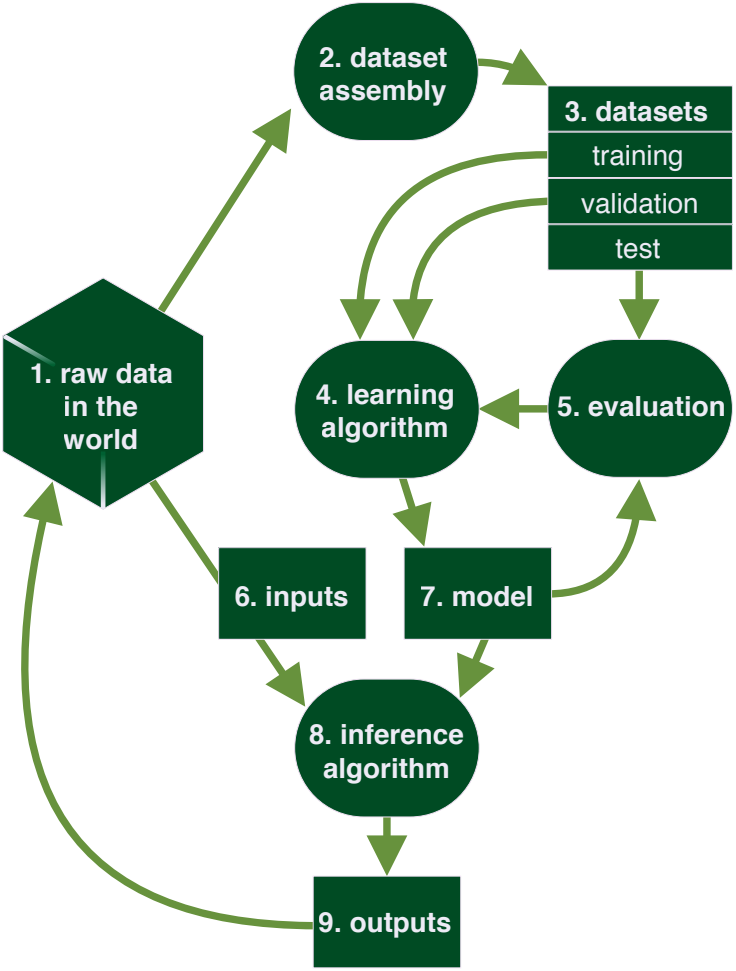

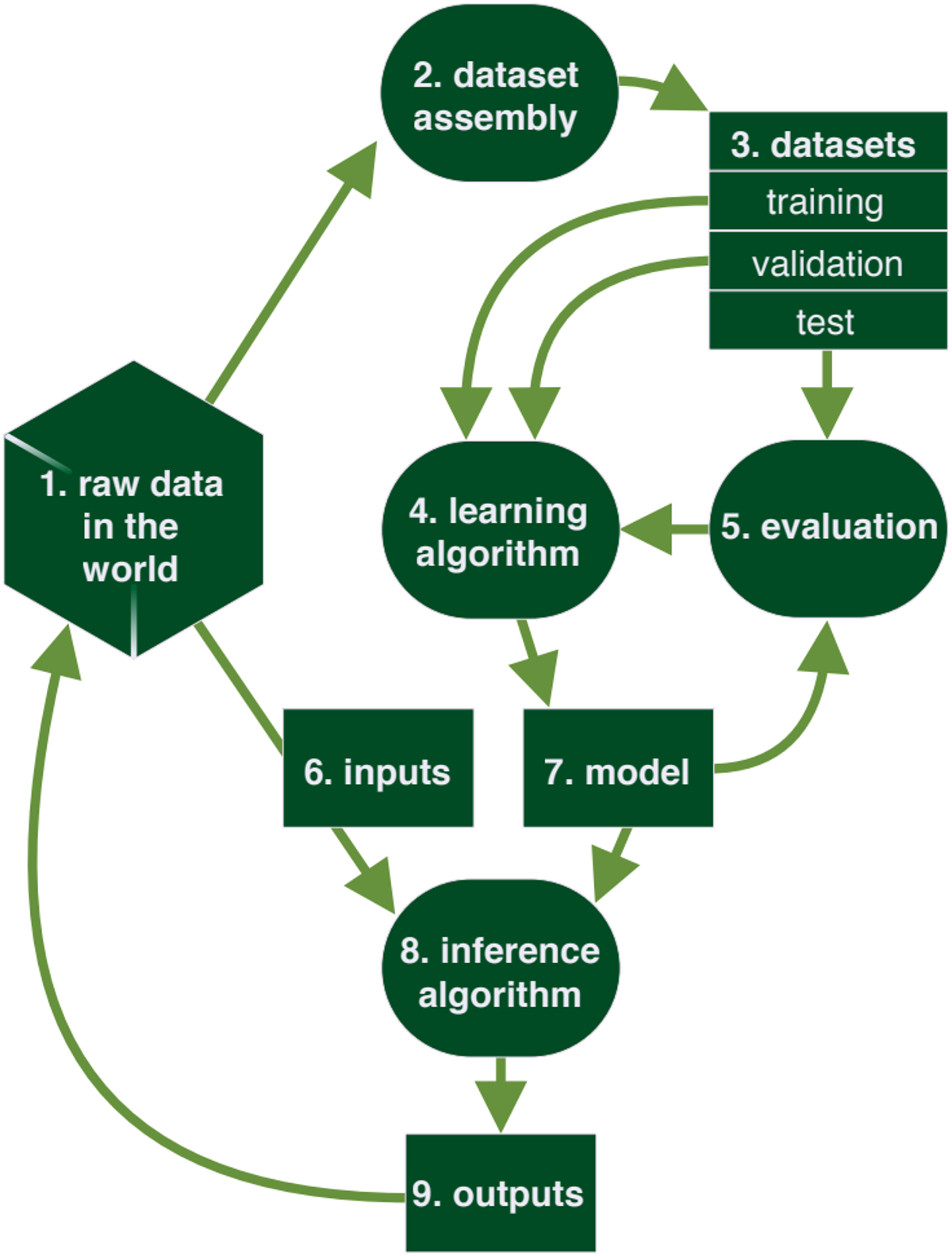

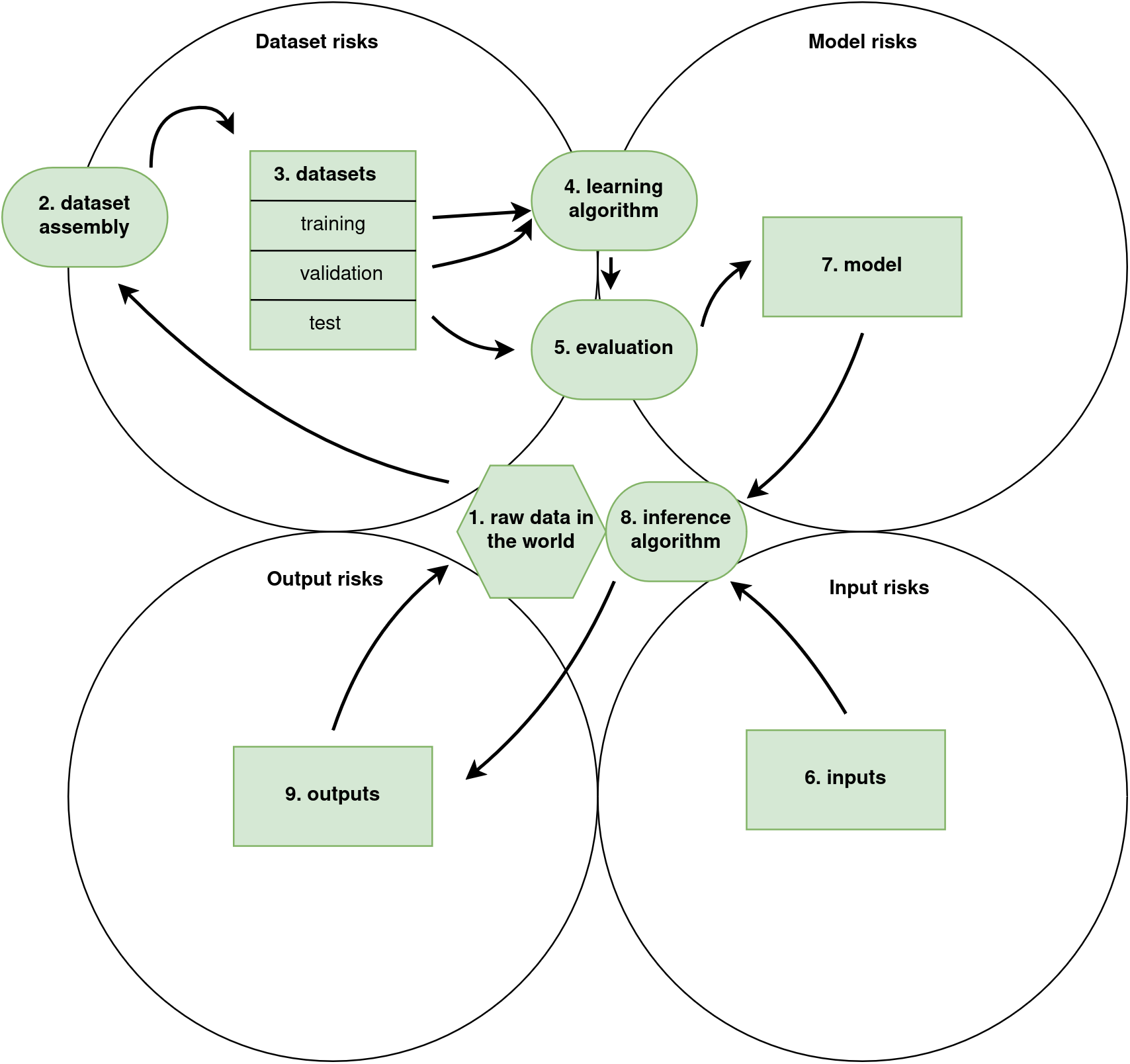

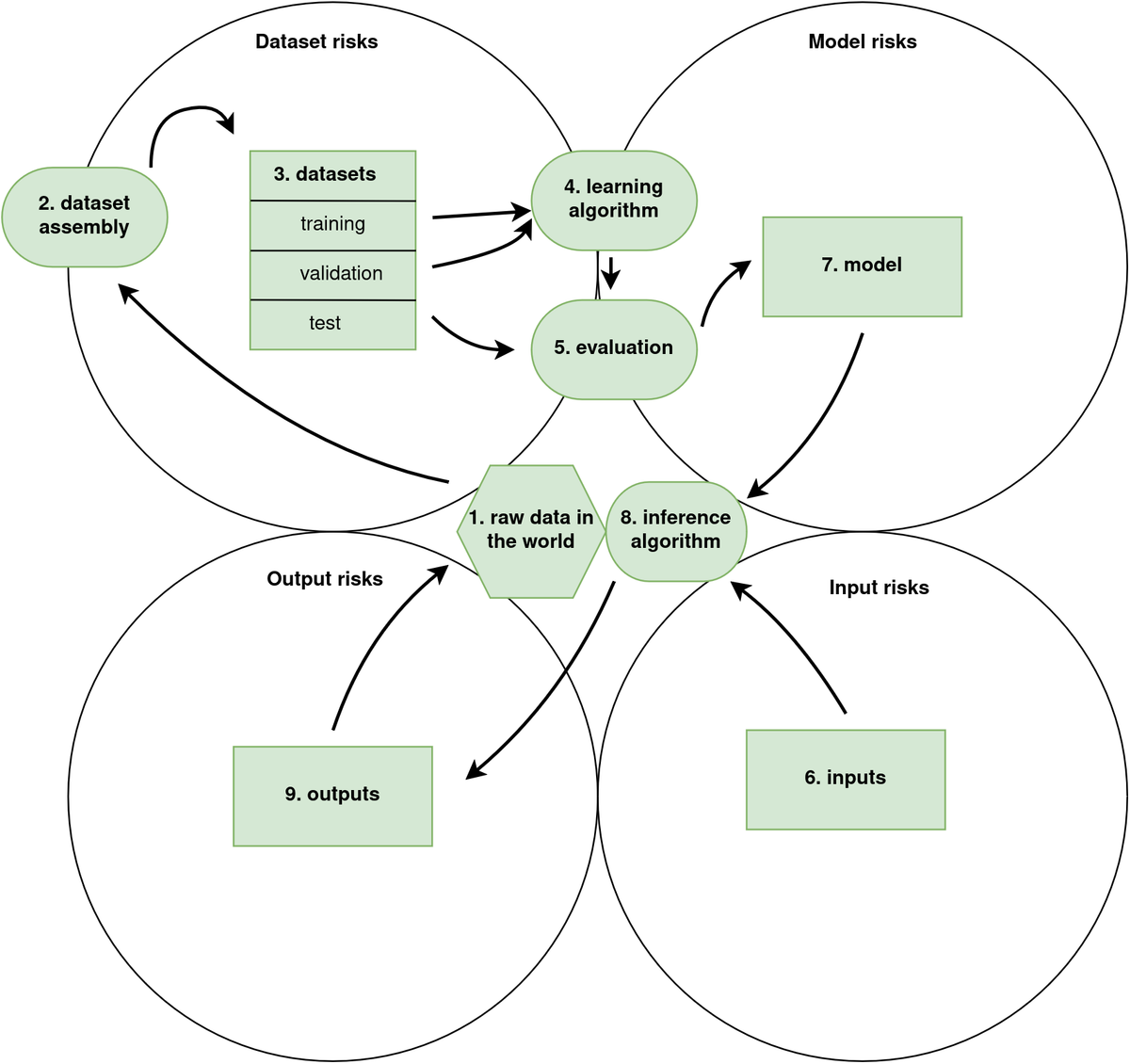

The BIML framework for risk analysis of a generic ML system: 1) raw data in the world, 2) dataset assembly, 3) datasets, 4) learning algorithm, 5) evaluation, 6) inputs, 7) model, 8) inference algorithm, and 9) outputs. A tenth category (10) represents the system as a whole.


BIML presents the components of a generic ML system in its training lifecycle from raw data to a model that receives input and produces output. The diagram describes nine basic components that represent various steps in training, setting up, and fielding a generic ML system. Their framework can be used as a reference for analyzing a specific ML system. BIML has identified risks with each of these components, which are relevant to think about when planning the steps of the learning lifecycle in an ML engineering process. Processes (components 2, 4, 5, and 8) are represented by ovals. Things and collections of things (components 3, 6, 7, and 9) are represented as rectangles. Component 1, raw data, is represented as a polygon as it’s a rather vast collection of things. A tenth category of risks is associated with the system as a whole.

For more details, I encourage you to check out BIML’s interactive version of the framework on their website.

Threat modeling

One of the ways to mitigate design flaws of a software system is to perform threat modeling while designing the software. Threat modeling is integrated as a part of a secure software development lifecycle (SSDL) to identify risks and threats of a system while designing it, examining the software architecture and design. This is something software professionals should think about, but it can be overwhelming or intimidating to get started.

To make threat modeling more accessible, Adam Shostack, while at Microsoft, introduced a way to gamify the process with his card game Elevation of Privilege. Elevation of Privilege has a deck of cards associated with risks built from the STRIDE threat framework, which was also launched by Microsoft. The game is a popular way to help people get started with threat modeling in a more relaxed environment: you bring the deck and a diagram of the system to a threat modeling session.

Introducing our own framework for Elevation of MLsec

There is still no formal STRIDE equivalent designed for ML, but the framework offered by BIML forms a suitable entry point for establishing common security practices around the architecture of ML systems. At Kantega, we want to offer our contribution by marrying the MLsec risk analysis from BIML with Elevation of Privilege, resulting in Elevation of MLsec. The work is published on GitHub under a Creative Commons license: https://github.com/kantega/elevation-of-mlsec.

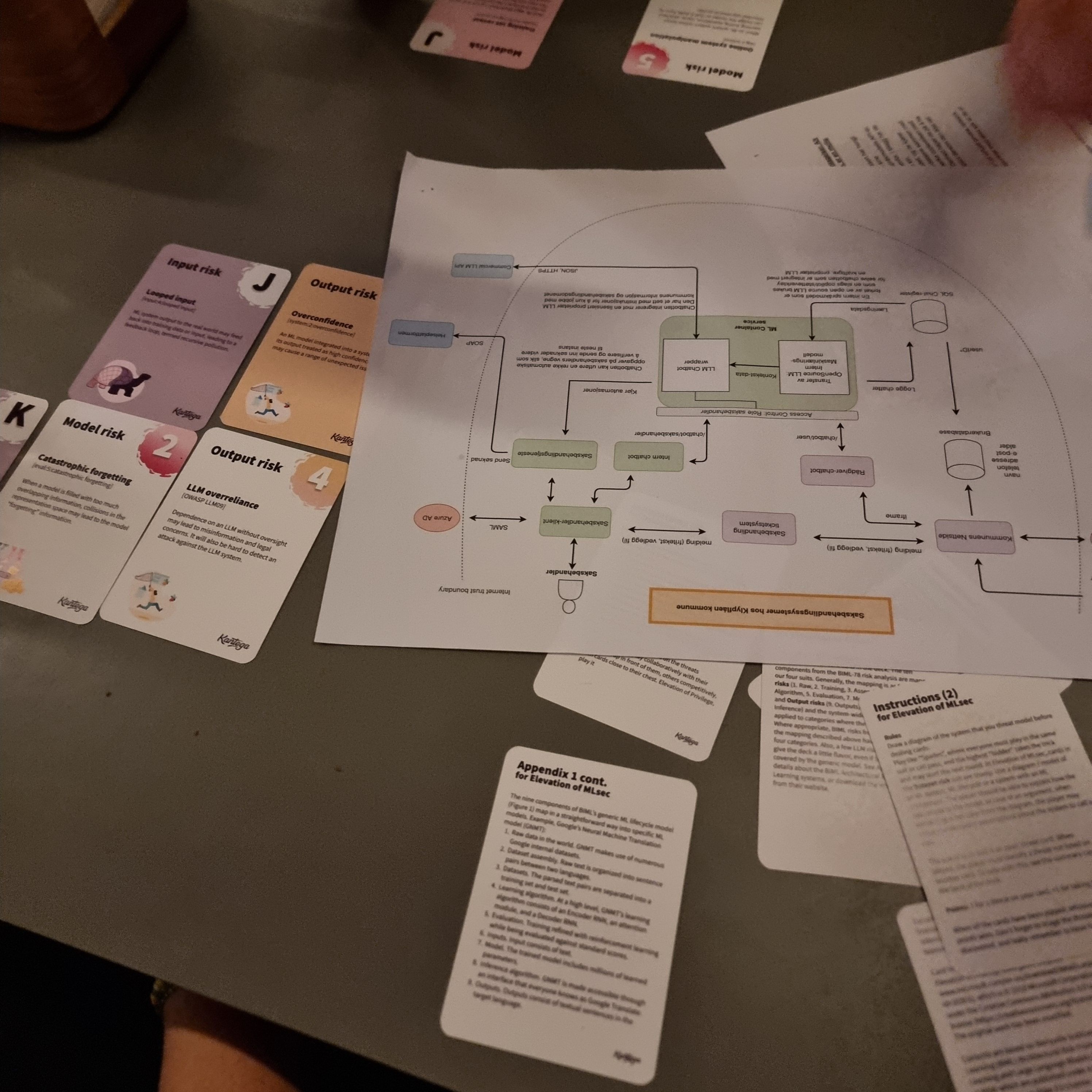

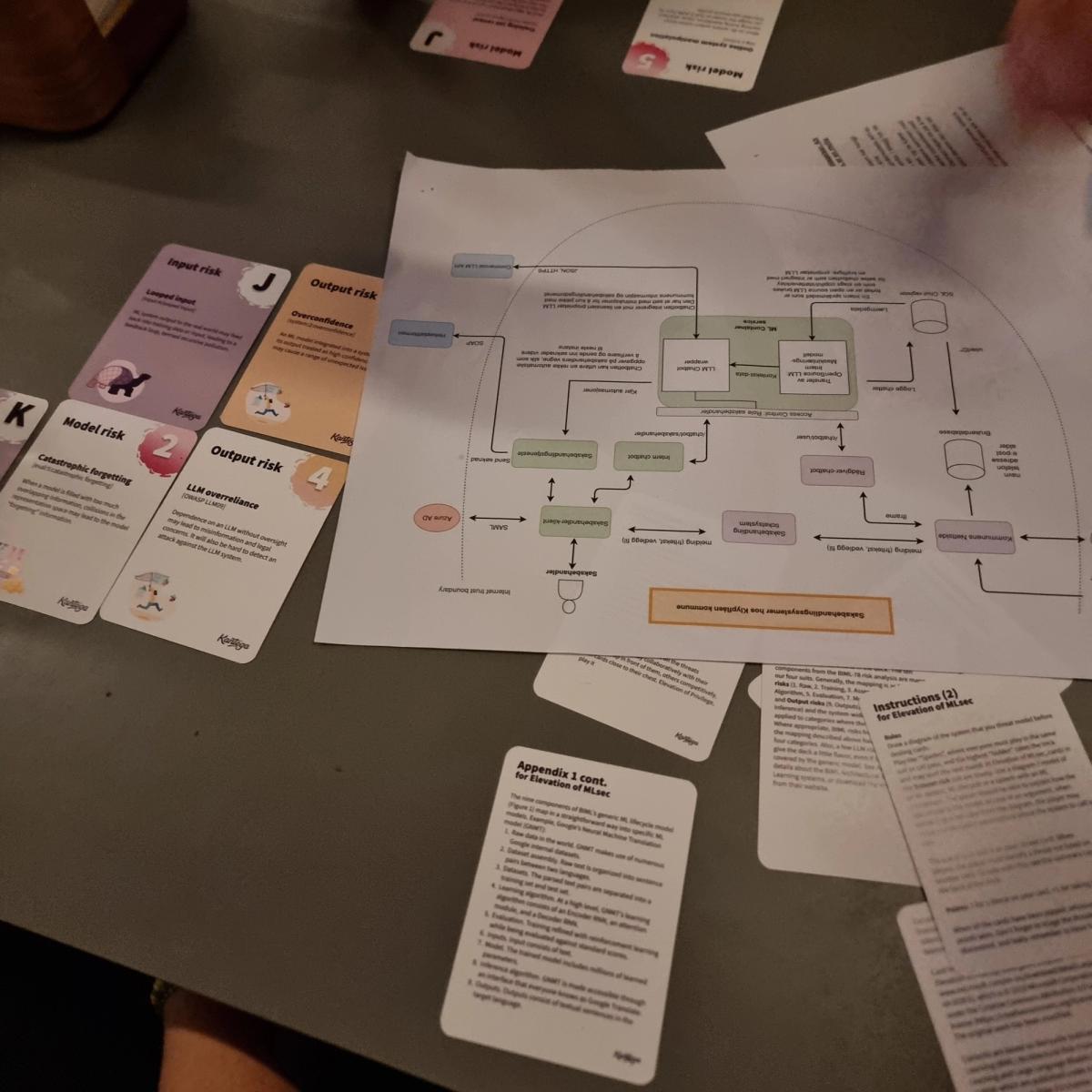

Elevation of MLsec is an unofficial Machine Learning Security extension of Elevation of Privilege. The playing cards portray risks associated with Machine Learning systems that have been identified by BIML and OWASP, based on BIML’s architectural risk framework. To turn BIML's architectural risk analysis for machine learning systems (referred to as BIML-78) into a card game, we have derived our own simpler framework from it. Please note that this game is not a comprehensive representation or application of the BIML risk frameworks. We have hand-picked the risks from the BIML risk analysis that we felt fit best in the context of this game.

We have simplified the BIML risk framework so that it falls into four risk categories (called suits in the game): Dataset risk, Input risk, Model risk and Output risk, abbreviated to DIMO. The ten components from the BIML-78 risk analysis are mapped to our four suits.

An illustration of how the BIML risk framework is mapped into the four card suits in Elevation of MLsec, in DIMO.


In what we call the DIMO framework, we form our suits from the four things in the BIML risk framework (the rectangles): 3. datasets, 6. inputs, 7. model, and 9. outputs. The ovals (components 2, 4, 5 and 8) and the polygon (component 1) are processes or data that form interfaces between the things, so their risks can be treated as risks of the things with which they interface. Risks about the system as a whole can also be assigned to a single component in our context, since each such risk is usually most present in one of them. This recategorization lets us put risks from all nine components (and the system component) into a framework of only four categories, which fits well with the four suits of a 52-card deck.
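The recategorization described above can be sketched as a small lookup table. This is a minimal sketch, not the mapping used in the published deck: the component names and shapes come from the BIML description in this article, but the names `Kind`, `COMPONENTS`, `SUITS`, `ASSUMED_NEIGHBOUR` and `suit_for` are hypothetical, and the assignment of each process (and the raw data) to a neighbouring thing is an assumption made purely for illustration.

```python
from enum import Enum


class Kind(Enum):
    PROCESS = "oval"
    THING = "rectangle"
    RAW_DATA = "polygon"


# The nine BIML components and their shapes, as described in the article.
COMPONENTS = {
    1: ("raw data in the world", Kind.RAW_DATA),
    2: ("dataset assembly", Kind.PROCESS),
    3: ("datasets", Kind.THING),
    4: ("learning algorithm", Kind.PROCESS),
    5: ("evaluation", Kind.PROCESS),
    6: ("inputs", Kind.THING),
    7: ("model", Kind.THING),
    8: ("inference algorithm", Kind.PROCESS),
    9: ("outputs", Kind.THING),
}

# The four suits correspond to the four "things" (rectangles).
SUITS = {3: "Dataset", 6: "Input", 7: "Model", 9: "Output"}

# ASSUMPTION: which thing each non-thing component interfaces with most.
# This assignment is illustrative only and may differ from the real deck.
ASSUMED_NEIGHBOUR = {1: 3, 2: 3, 4: 7, 5: 7, 8: 9}


def suit_for(component: int) -> str:
    """Return the DIMO suit for a BIML component number (1-9)."""
    if component not in COMPONENTS:
        # Component 10 (the system as a whole) is assigned risk by risk,
        # so it has no single suit in this sketch.
        raise ValueError(f"no fixed suit for component {component}")
    thing = component if component in SUITS else ASSUMED_NEIGHBOUR[component]
    return SUITS[thing]


if __name__ == "__main__":
    for number, (name, kind) in COMPONENTS.items():
        print(f"{number}. {name} ({kind.value}) -> {suit_for(number)}")
```

Keeping the things and the assumed neighbours in separate tables makes it easy to change a single assignment without touching the suits themselves.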

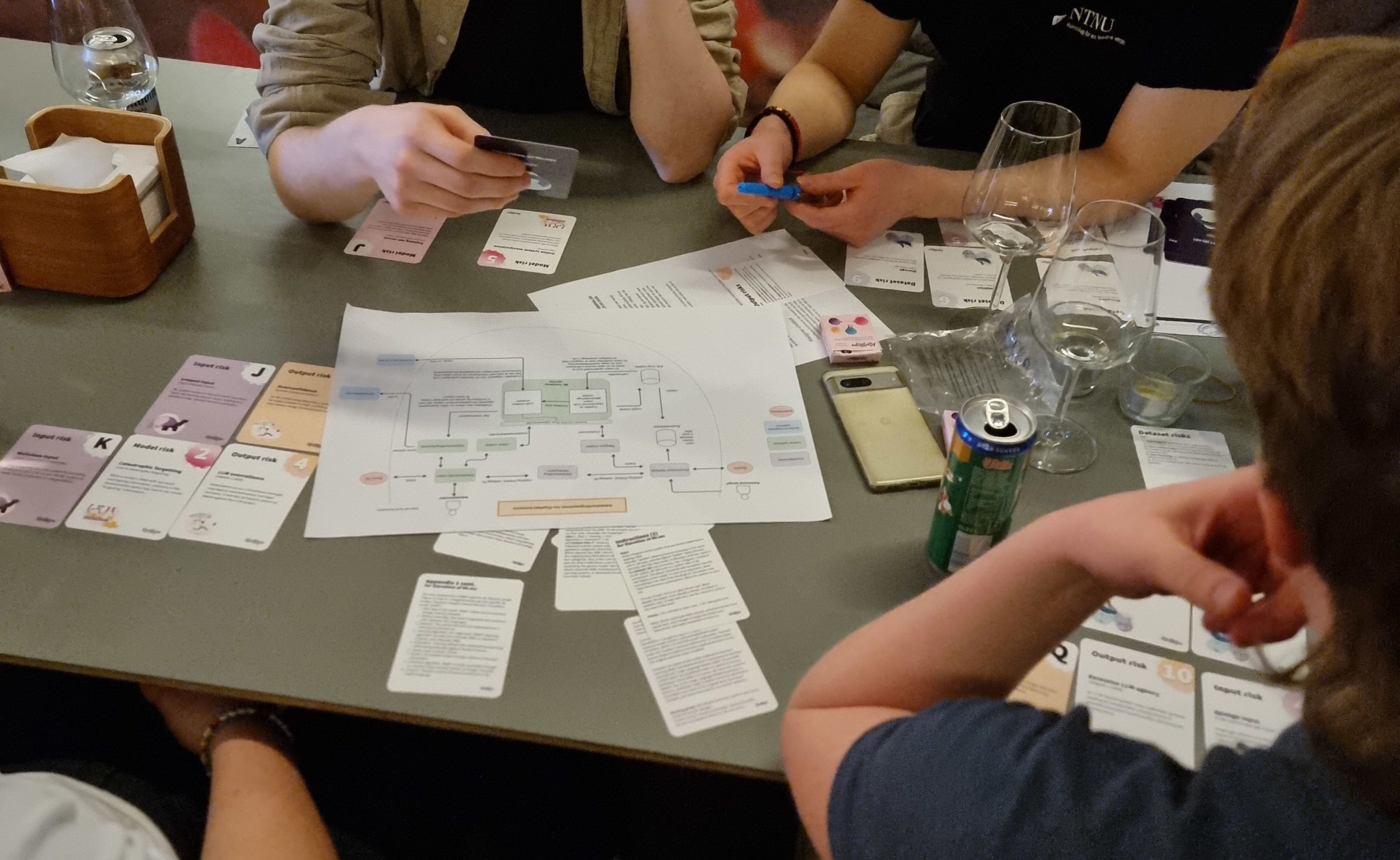

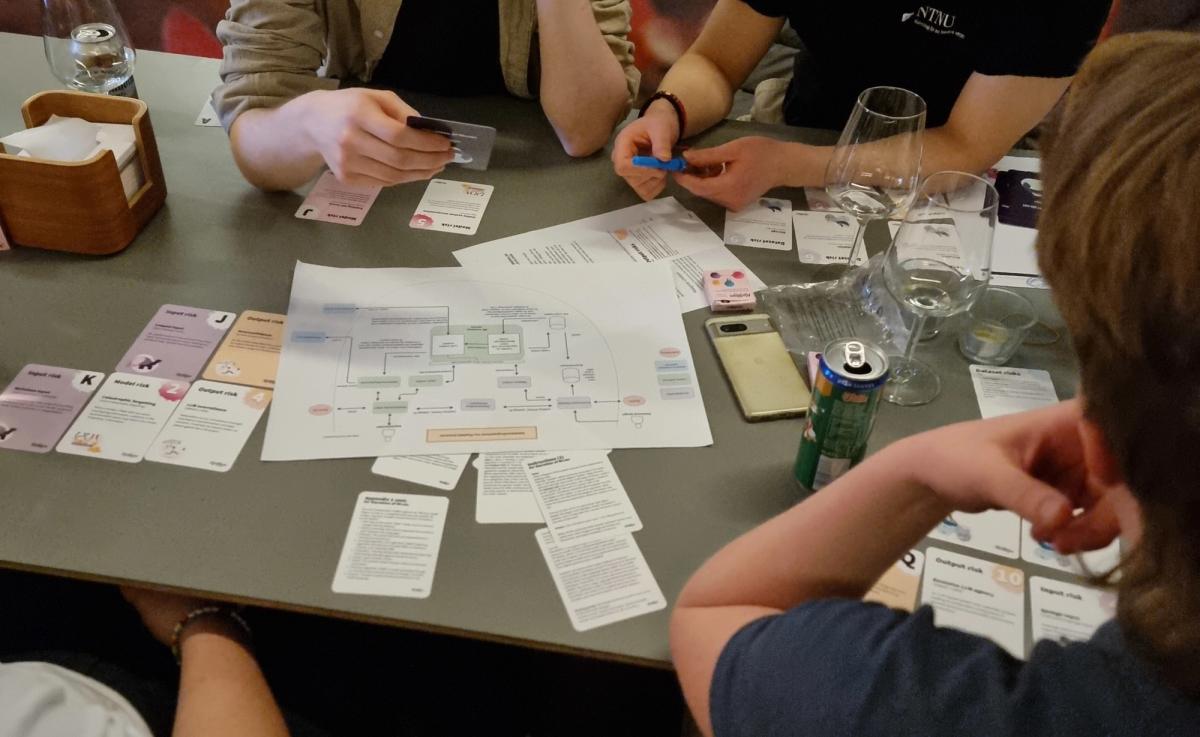

We tested the game on 40 university students visiting our offices, and it shows promise as a learning tool for gaining knowledge about the risks that ML systems carry, and for getting started with threat modeling.

Students engaged in a threat-modeling workshop in our offices


Running a small risk analysis session with your team is really simple:

- Collect the team members

- Draw up a model of your system or ML lifecycle and bring an Elevation of Privilege scorecard

- Deal the cards. Play like Spades, where Dataset risks are always the trump suit.

- Start threat modeling! Read one of the fitting cards and examine the system. Associate a card’s risk with the system and discuss why it’s a relevant risk.

- If necessary, the risk identified becomes an actionable to-do item in your issue tracking system.
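The Spades-style play in the steps above can be sketched in a few lines. This is a hypothetical illustration under stated assumptions: 13 ranks per suit (2 up to ace) in a 52-card deck, the whole deck dealt round-robin, and the usual trick-taking rule where the highest trump wins and otherwise the highest card in the led suit wins. The names `Card`, `deal` and `trick_winner` are invented for this example; scoring and the actual threat discussion are not modeled.

```python
import random
from dataclasses import dataclass

SUITS = ("Dataset", "Input", "Model", "Output")
TRUMP = "Dataset"            # Dataset risks are always the trump suit
RANKS = tuple(range(2, 15))  # ASSUMPTION: 2..10, J=11, Q=12, K=13, A=14


@dataclass(frozen=True)
class Card:
    suit: str
    rank: int


def deal(num_players, seed=None):
    """Shuffle the full deck and deal it out round-robin."""
    deck = [Card(suit, rank) for suit in SUITS for rank in RANKS]
    random.Random(seed).shuffle(deck)
    return [deck[i::num_players] for i in range(num_players)]


def trick_winner(plays):
    """Return the winning player of one trick.

    `plays` is a list of (player, Card) in the order played;
    the first card sets the led suit.
    """
    led_suit = plays[0][1].suit
    trumps = [play for play in plays if play[1].suit == TRUMP]
    # Any trump beats the led suit; otherwise only led-suit cards can win.
    candidates = trumps or [play for play in plays if play[1].suit == led_suit]
    return max(candidates, key=lambda play: play[1].rank)[0]


if __name__ == "__main__":
    hands = deal(num_players=4, seed=1)
    print([len(hand) for hand in hands])
    trick = [
        ("Ada", Card("Input", 14)),
        ("Ben", Card("Dataset", 2)),
        ("Cat", Card("Input", 5)),
    ]
    print(trick_winner(trick))
```

In a real session the interesting part is not who wins the trick but the discussion each card triggers, so a sketch like this is mainly useful for explaining the rules to new players.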

To learn more details about how to play, check out this excellent article about playing Elevation of Privilege, which can also be applied to this game: https://devika-gibbs.medium.com/how-to-play-the-elevation-of-privilege-card-game-daa890946dbc.

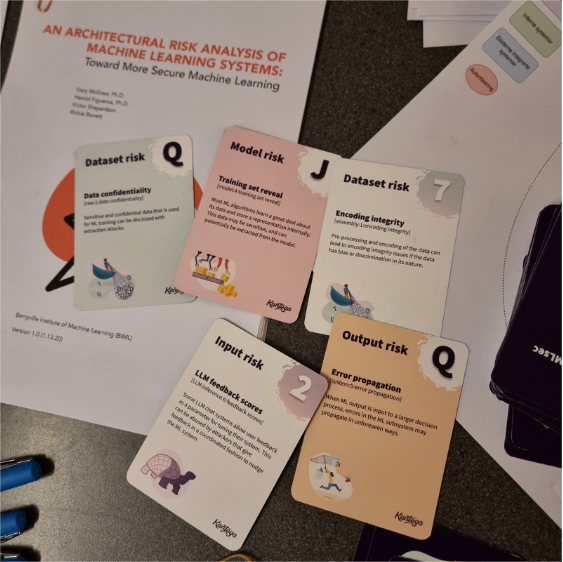

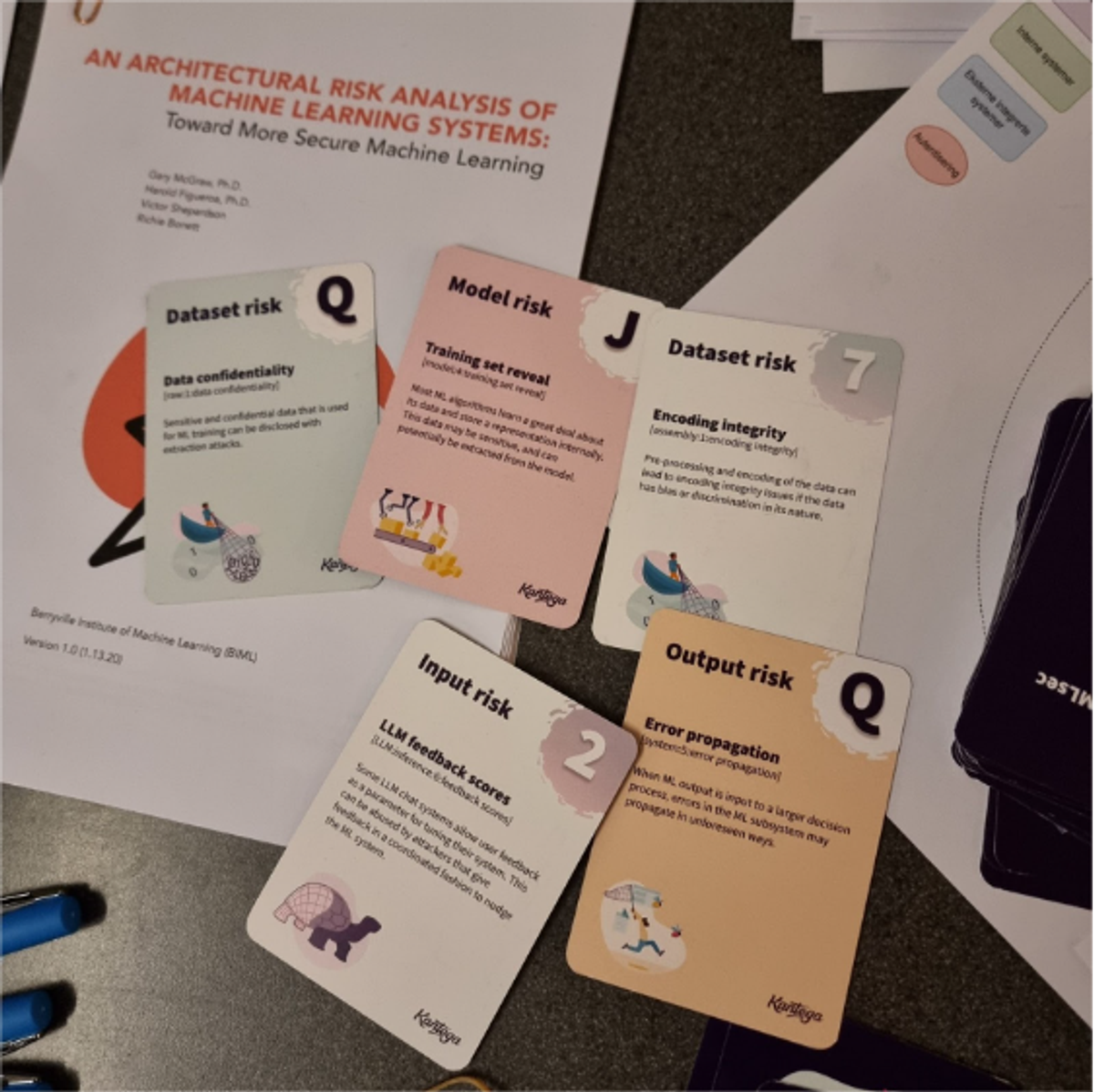

Elevation of MLsec cards used to threat-model a system that has AI components


People in the following roles may find these cards useful as a way to get started with security engineering in an organization that employs machine learning in some way.

- Security practitioners can use this game to perform relevant threat modeling coaching with Data Science and ML engineering teams.

- ML practitioners and engineers may use this deck as a way to start incorporating threat modeling into their engineering process.

- Software professionals integrating their "traditional software system" with an ML component can use these cards to get familiar with potential risks that come from integrating the ML component.

We cannot rely solely on testing, red teaming and security monitoring systems when faced with security issues. We should shift left and think about security earlier in our process of building software. This means thinking about risks in a structured manner using techniques like threat modeling, and learning how to properly build more secure systems with a security by design mindset. I believe that gamification is an effective learning tool, and hope that Elevation of MLsec can help people out there learn about the risks of machine learning.

References

McGraw, G., Figueroa, H., Shepardson, V., & Bonett, R. (2020). An architectural risk analysis of machine learning systems: Toward more secure machine learning. Berryville Institute of Machine Learning, Clarke County, VA. https://berryvilleiml.com/. Accessed on: Mar, 24.

McGraw, G., Figueroa, H., McMahon, K., & Bonett, R. (2024). An architectural risk analysis of large language models: Applied machine learning security. https://berryvilleiml.com/. Accessed on: Mar, 24.

Mitchell, M. (2019). Artificial intelligence: A guide for thinking humans. Penguin UK.

OWASP Top 10 for LLM Applications Cybersecurity and Governance Checklist Team. https://owasp.org/www-project-top-10-for-large-language-model-applications/. Accessed on: Mar, 24.